Kubernetes 02 (YAML, labels, Replication Controller, Service, types of Service) with a real use case

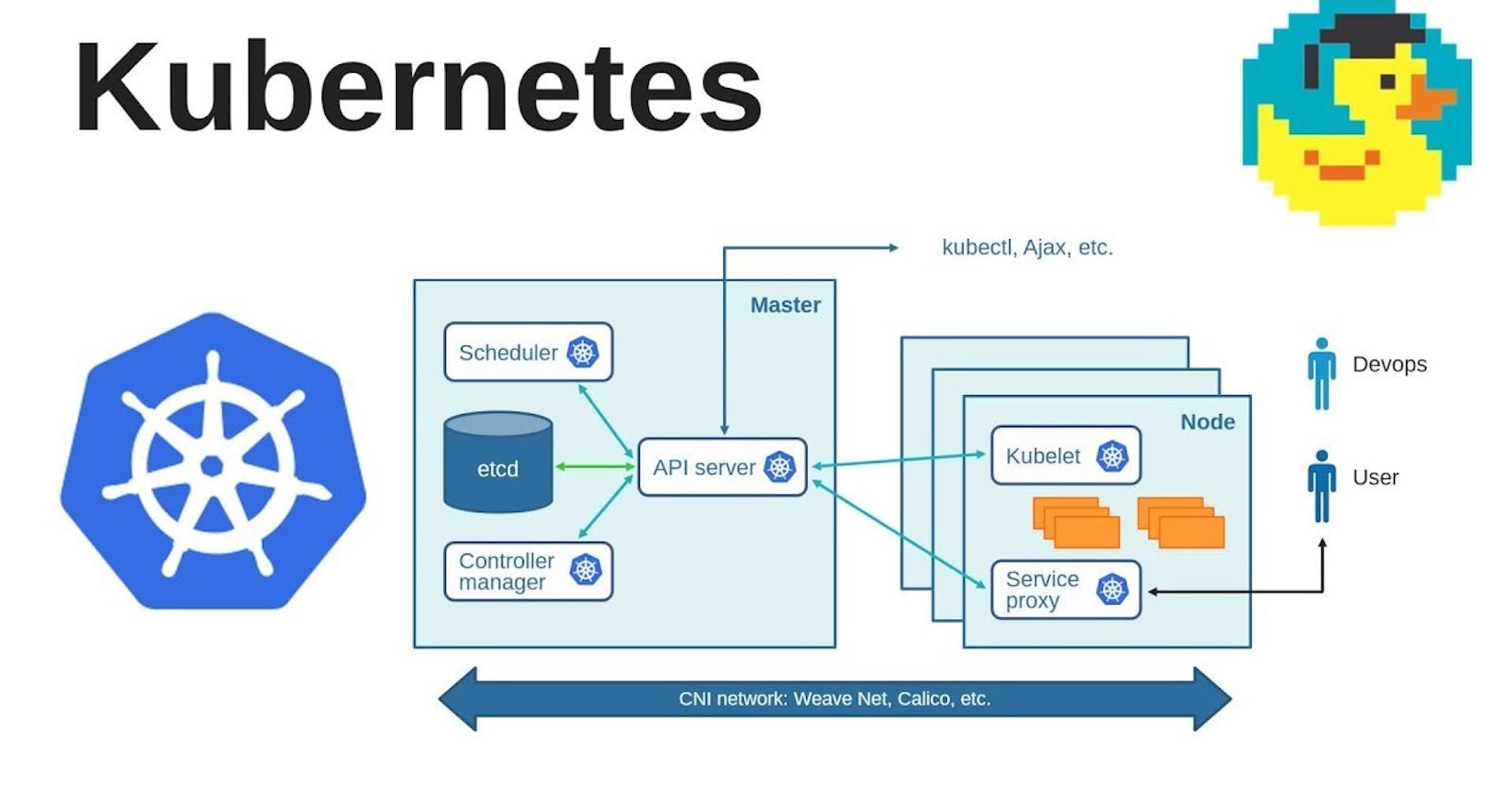

kubernetes controller

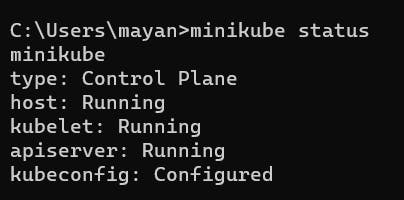

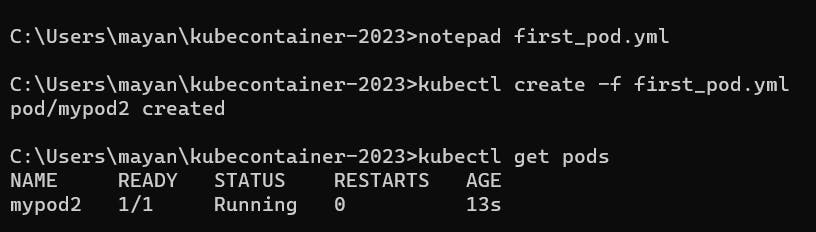

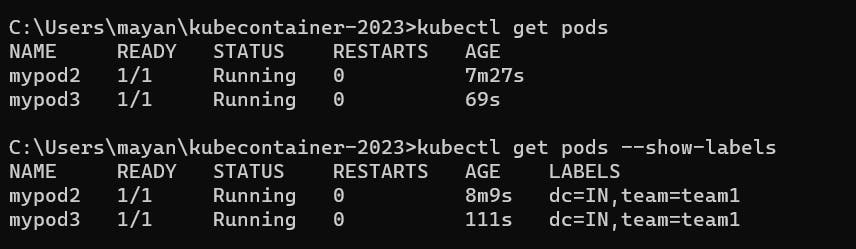

first check the status

Resource examples: a pod and a load balancer.

If you want to connect one resource to another, that is where labels come in.

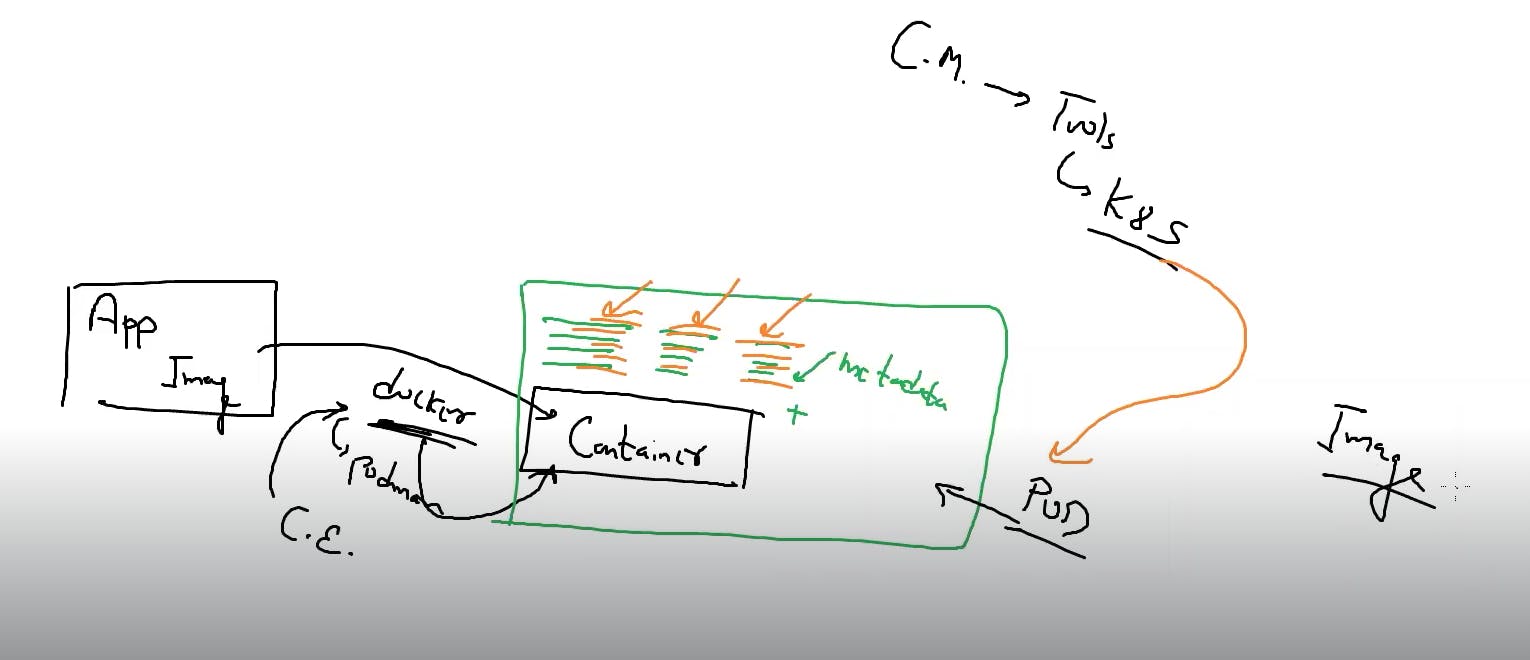

Kubernetes is not a container engine; it only manages containers. To do this, it attaches some extra information (metadata) to the container: container + metadata = pod.

For example, we have an app inside an image, and Docker (the container engine) launches the container.

From now onwards, we launch the pod directly from the image.

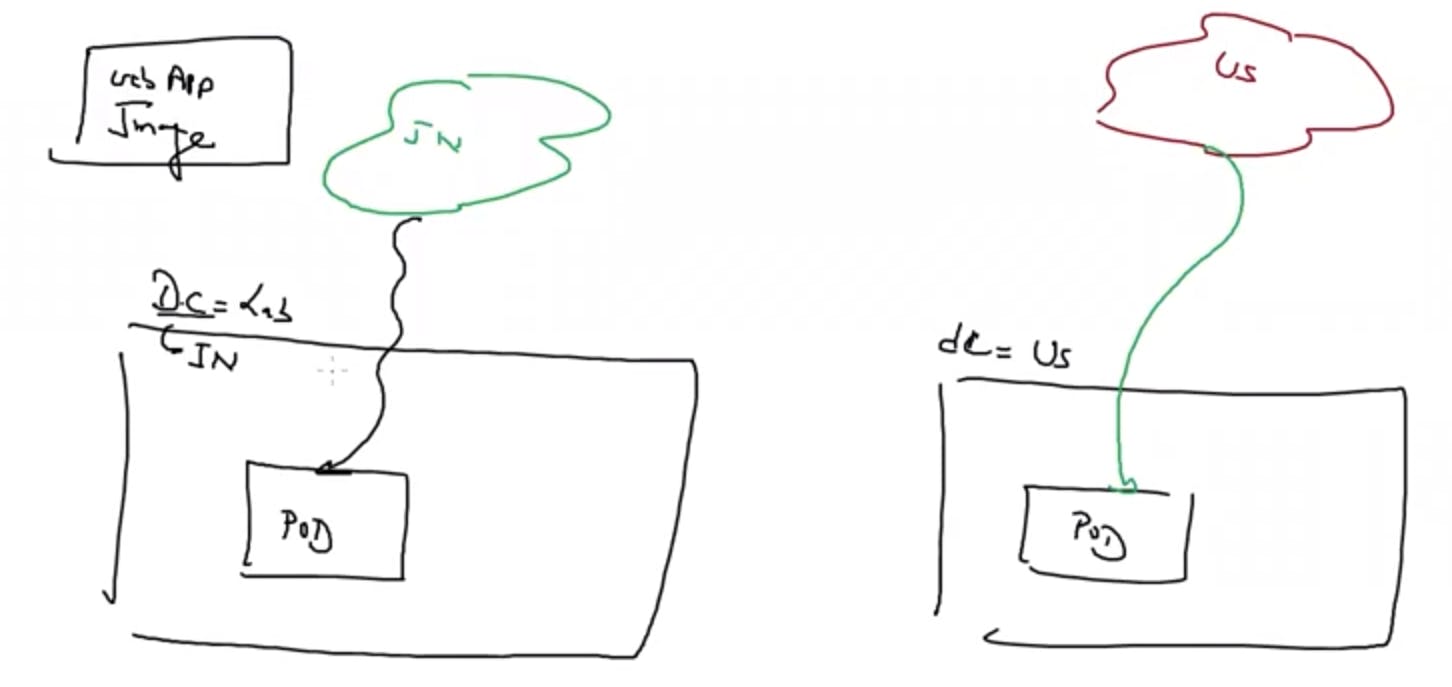

Different pods are launched in different regions so that clients from each region can access the pods launched in their own region.

For example, a company may have multiple datacenters, multiple teams, and multiple regions.

When we put an app inside an image, it is known as a containerised app.

Say we launch pods in India and in the US. In India, team T1 launches 50 pods and team T2 launches 2 pods, while in the US, team T1 launches 3 pods and team T2 launches 25 pods. Across all teams and regions we end up with around 1000 pods, and there is a single Kubernetes cluster for the whole company, so all the teams use the same cluster. If you run

kubectl get pods, it shows every pod that is running, i.e. all 1000. If some team runs a pod holding critical information, that raises a security issue, which is solved with role-based access control (RBAC) and namespaces, so that the critical information is visible only to that team.

In simple terms: with hundreds of pods running, how do you find a particular pod? Team T1 works in India and also in the US, so we want to filter, e.g. first the pods running in the US region, then those belonging to T1. That is the kind of filtering we are going to do.

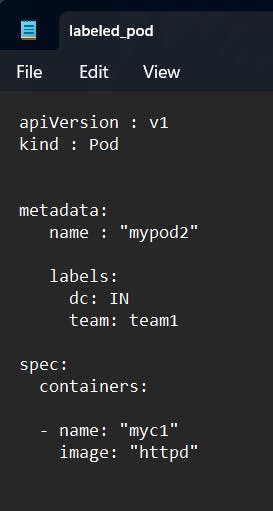

So while launching the pod we tag it; technically, we give it a label, which is a key-value pair.

We can clearly see that no label has been given.

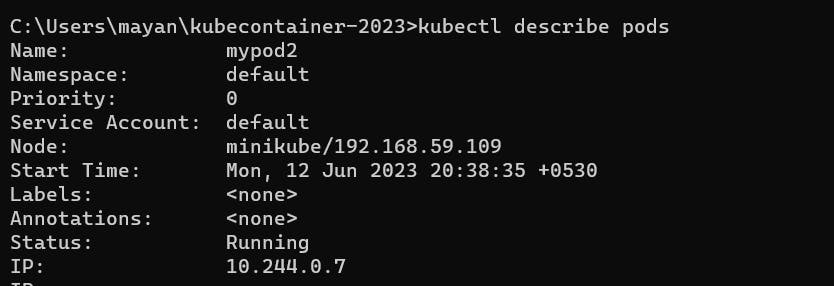

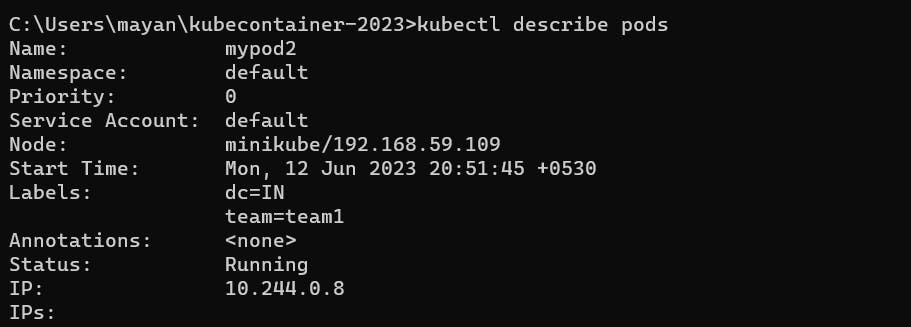

To check, describe the pod: kubectl describe pods

We can clearly see that Labels shows none, but after setting a label we can see it.

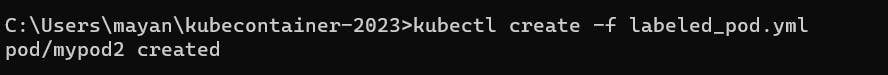

Let's delete it and launch the pods with labels.

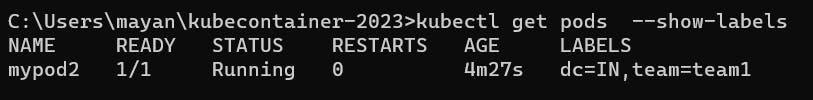
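As a minimal sketch of such a manifest, assuming the httpd image (the post does not name the image) and using the label keys dc and team from this post, a pod with labels could look like this:

```yaml
# Pod manifest with two labels (key-value pairs) attached in metadata.
# Assumption: httpd is used only as an example web server image.
apiVersion: v1
kind: Pod
metadata:
  name: mypod1
  labels:
    dc: IN        # datacenter / region label
    team: team1   # owning team label
spec:
  containers:
  - name: c1
    image: httpd
```

Launch it with kubectl create -f followed by your file name; kubectl get pods --show-labels should then list dc=IN,team=team1 for mypod1.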

kubectl get pods shows very little information.

To check the labels with the get command:

kubectl get pods --show-labels

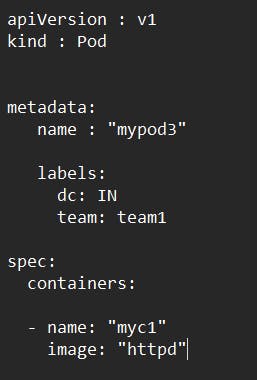

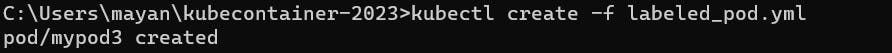

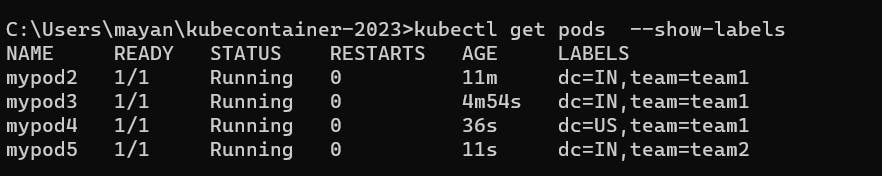

Let's launch more pods by editing the same file.

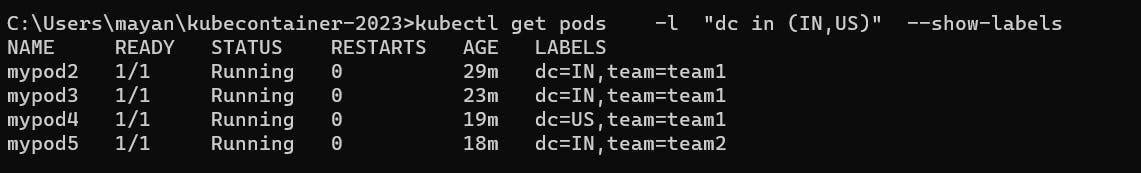
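One way to keep several pods in the same file is to separate the YAML documents with ---. This is a sketch with hypothetical pod names (mypod2, mypod3) and the same assumed httpd image; the labels follow the IN/US and team1/team2 scheme used in the selector commands that follow.

```yaml
# Two more pods in the same file; "---" separates YAML documents.
# Hypothetical names and assumed httpd image; labels follow the dc/team scheme.
apiVersion: v1
kind: Pod
metadata:
  name: mypod2
  labels:
    dc: US
    team: team1
spec:
  containers:
  - name: c1
    image: httpd
---
apiVersion: v1
kind: Pod
metadata:
  name: mypod3
  labels:
    dc: US
    team: team2
spec:
  containers:
  - name: c1
    image: httpd
```

If the file still contains an already-running pod, kubectl create reports that it already exists for that one; kubectl apply -f creates only what is missing.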

So we now have four pods running with labels.

But why do we set labels? One of the reasons is search.

In the Kubernetes world, search is called a selector: technically, we are selecting (searching for) resources by their labels.

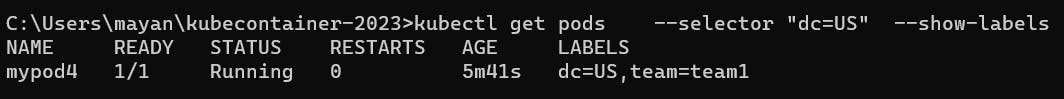

use : kubectl get pods --selector "dc=US" --show-labels

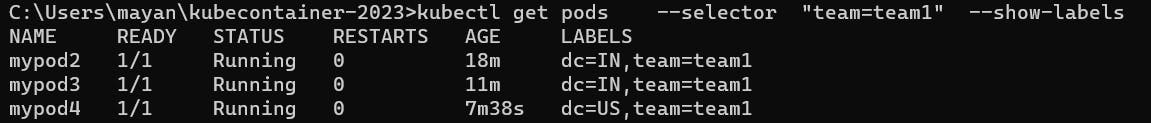

command : kubectl get pods --selector "team=team1" --show-labels

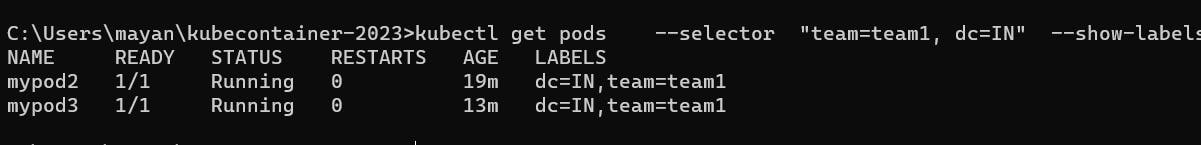

command : kubectl get pods --selector "team=team1, dc=IN" --show-labels

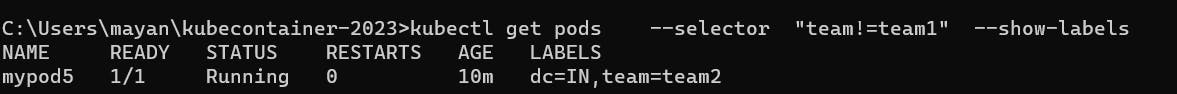

Selectors also support operators such as != (equality-based example):

kubectl get pods --selector "team!=team1" --show-labels

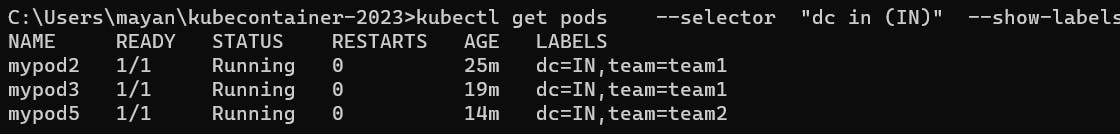

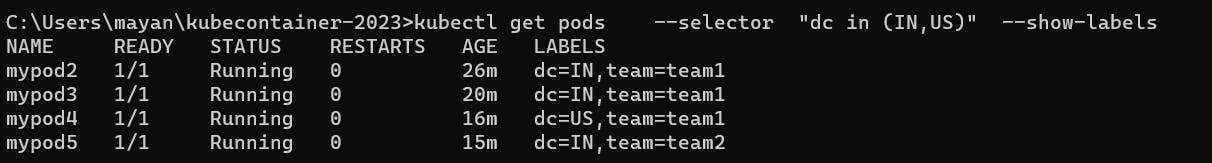

We can also write it in a more expressive way, closer to human language (set-based example):

kubectl get pods --selector "dc in (IN)" --show-labels

kubectl get pods --selector "dc in (IN,US)" --show-labels

Instead of --selector we can also use the short form -l, for example: kubectl get pods -l "dc=US" --show-labels
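The same set-based filter can also be written inside a manifest using matchExpressions. A ReplicationController only understands equality-based selectors, so this sketch assumes a ReplicaSet (the object covered by the docs link in the next section); the name myrs1, the replica count, and the httpd image are hypothetical.

```yaml
# ReplicaSet whose selector uses a set-based expression: dc in (IN, US).
# Hypothetical name and image; a ReplicationController cannot use matchExpressions.
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: myrs1
spec:
  replicas: 2
  selector:
    matchExpressions:
    - key: dc
      operator: In
      values:
      - IN
      - US
  template:
    metadata:
      labels:
        dc: IN   # must satisfy the selector above
    spec:
      containers:
      - name: c1
        image: httpd
```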

Replication controller:

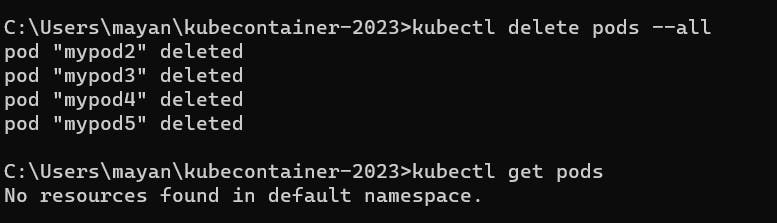

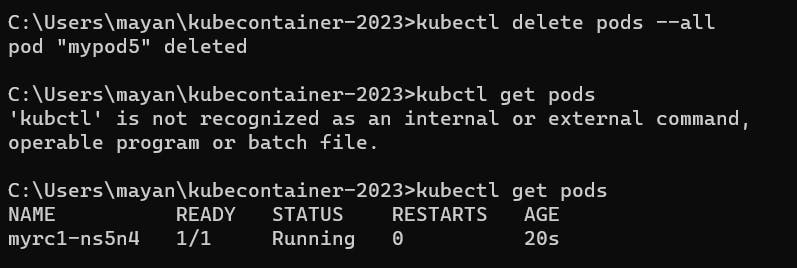

When you launch a pod on its own, you might expect Kubernetes to keep monitoring it and relaunch it if it is deleted. But in the example above, Kubernetes does not relaunch the pod. Why?

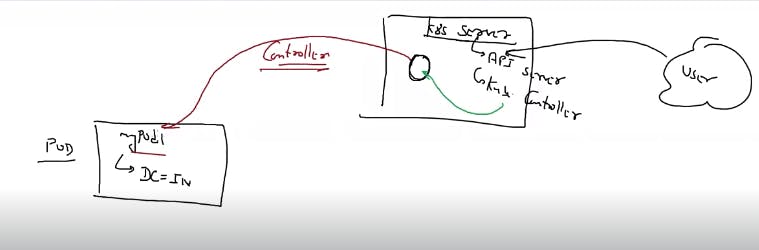

Internally, Kubernetes has two programs involved here: the API server and the kube controller (kube-controller-manager).

The user (client) connects through the API server, and through it we can tell the kube controller: a pod named mypod1 with the label dc=IN is running, keep monitoring it.

The good thing about the kube controller is that we can tell it we want a particular pod to run continuously, so we ask for replicas of it: one copy, two copies, or as many as required. That is why the pod keeps running.

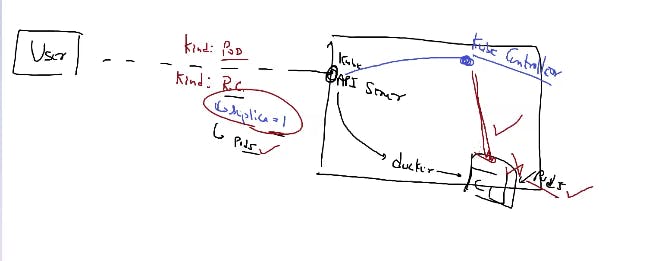

But how does the kube controller know how many replicas (copies) we want? The user has to contact the kube controller and state the requirement: monitor this particular pod, and keep this many replicas of it.

You, as a user, contact Kubernetes (the kube API server) and tell it what you want to do with a pod; it passes the request to Docker, which launches the container. But for monitoring, the user has to talk to a different program, the replication controller: the request again goes to the API server, which hands it to the kube controller. There we specify the pod with its label

and tell it to keep monitoring wherever it finds that label.

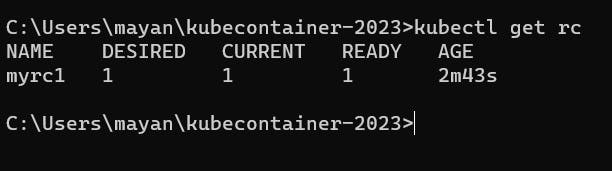

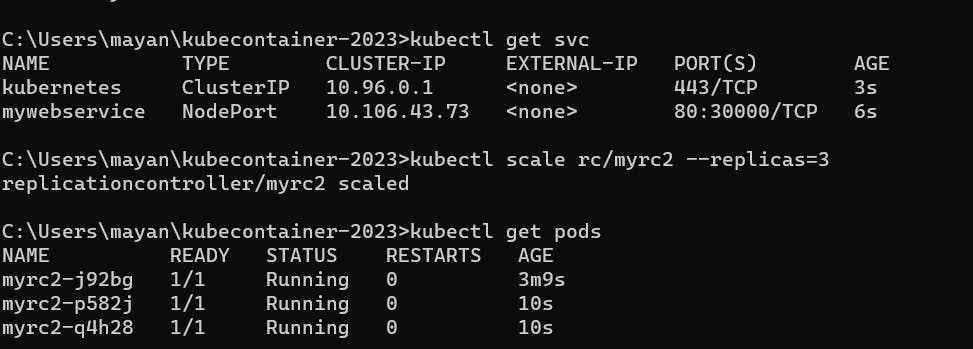

to check the replication controller:

kubectl get replicationcontroller or kubectl get rc

So how do we create a replication controller?

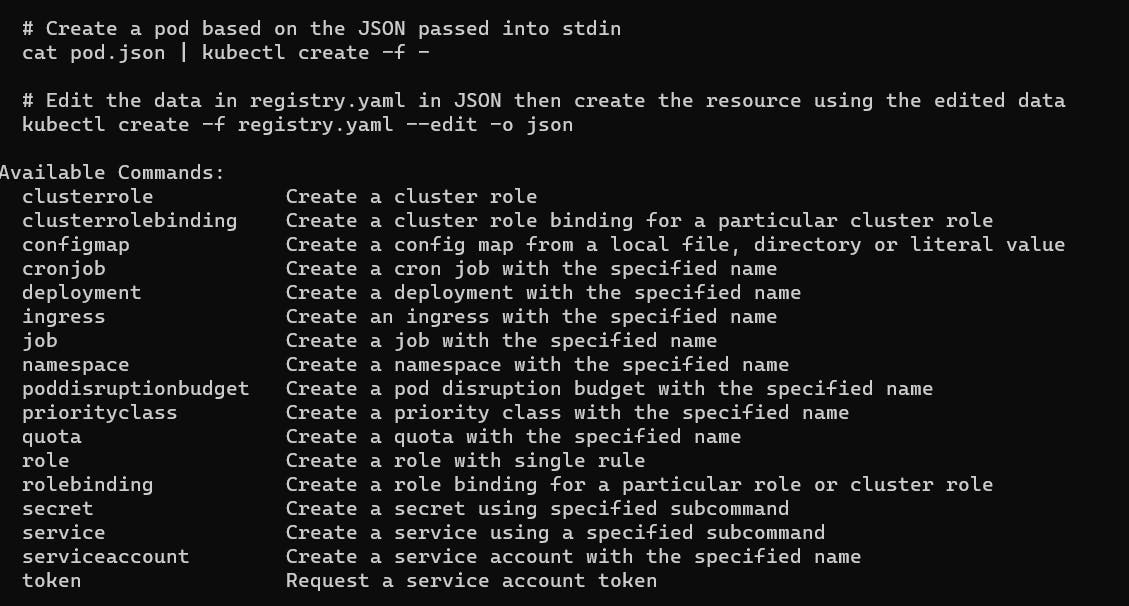

If you don't know a command, always check its help section:

kubectl create -h

This help section lists the options it supports.

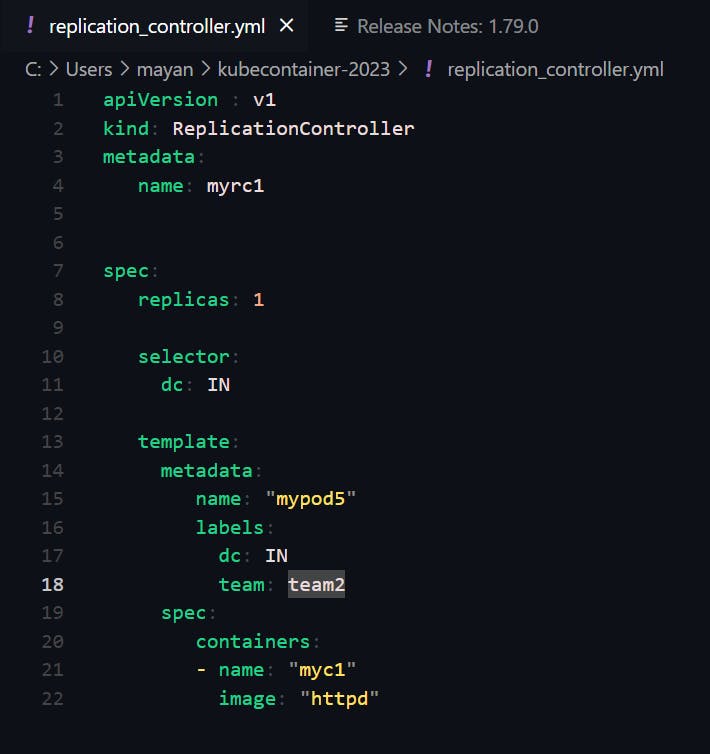

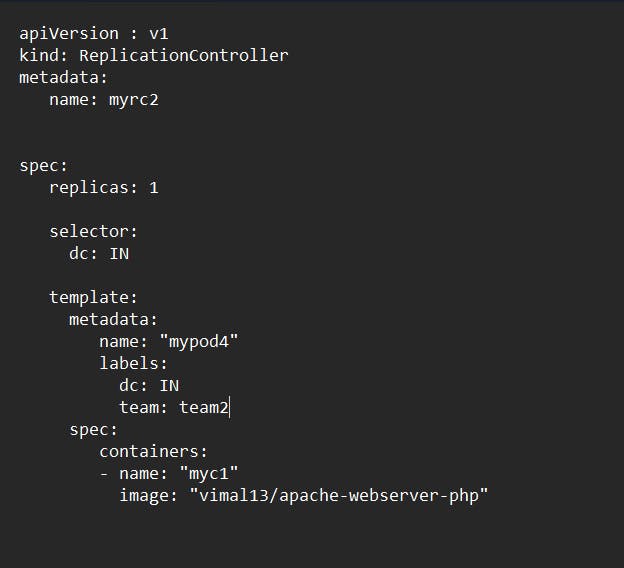

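It looks like we can't create a replication controller with this command, so we have to write the code (a YAML manifest) ourselves.

Go through: https://kubernetes.io/docs/concepts/workloads/controllers/replicaset/ (this page covers ReplicaSet, the newer replacement for ReplicationController; the manifest keywords are very similar)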

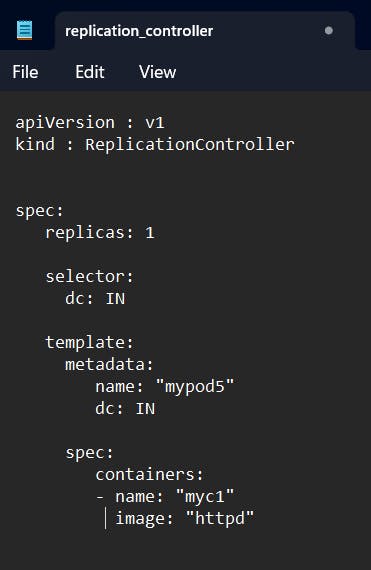

to see the keys (keywords) we use in a replication controller manifest.

This is the code for the replication controller:

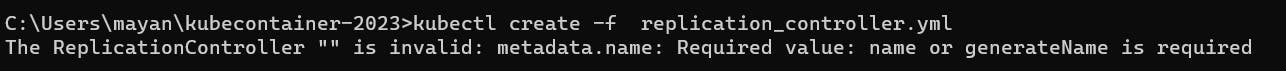

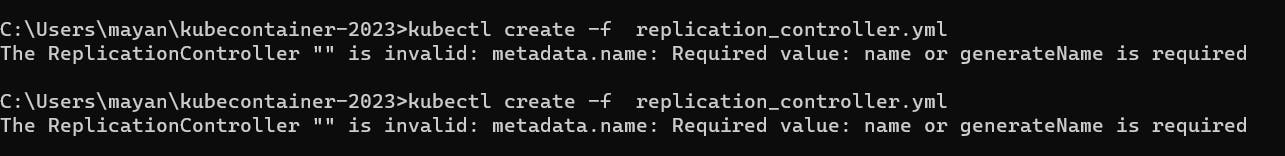

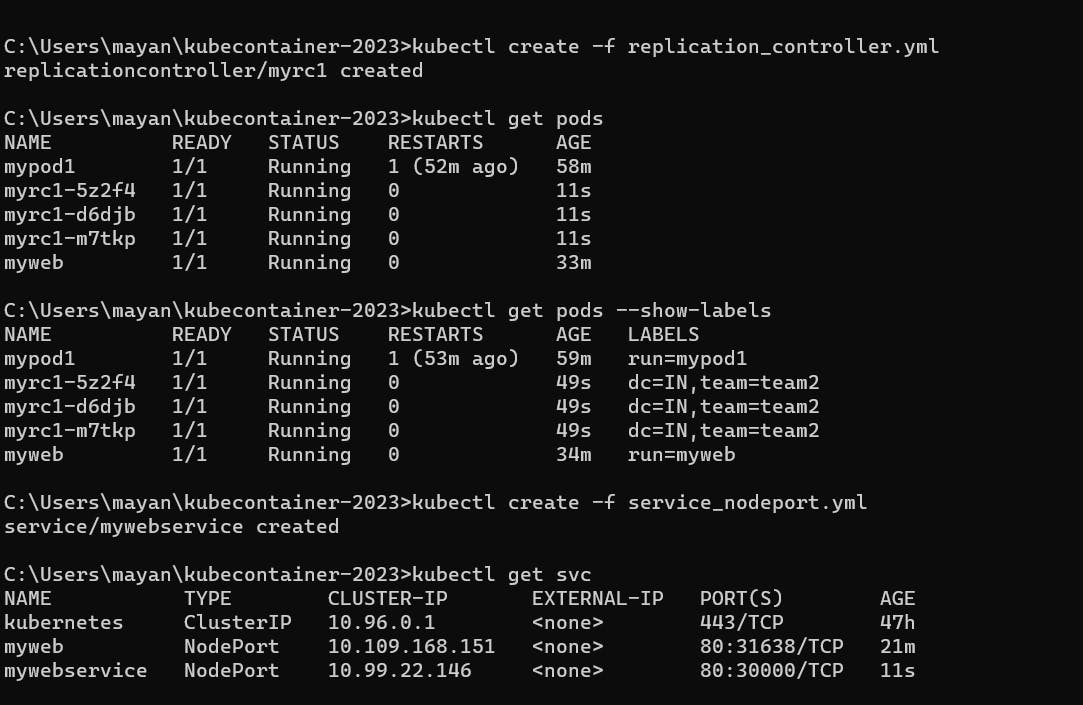
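For reference, a minimal ReplicationController manifest could look like the sketch below. The name myrc1 comes from the scale command later in this post; the image (httpd), the replica count, and the dc: IN label are assumptions. The key point is that spec.selector must match the labels in spec.template, because that is how the controller finds the pods it keeps monitoring.

```yaml
# Minimal ReplicationController: keeps 3 pods matching dc=IN running.
# Assumptions: httpd image, 3 replicas, label dc: IN.
apiVersion: v1
kind: ReplicationController
metadata:
  name: myrc1
spec:
  replicas: 3            # desired number of pod copies
  selector:
    dc: IN               # equality-based selector (plain key: value map)
  template:              # pod template used to (re)create pods
    metadata:
      labels:
        dc: IN           # must match the selector above
    spec:
      containers:
      - name: c1
        image: httpd
        ports:
        - containerPort: 80
```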

Then run: kubectl create -f replica_controller.yml

Errors: here are the changes we had to make.

To check it: kubectl get rc

As soon as I delete a pod, the replication controller relaunches it, because it keeps monitoring it.

We have successfully launched the replicas.

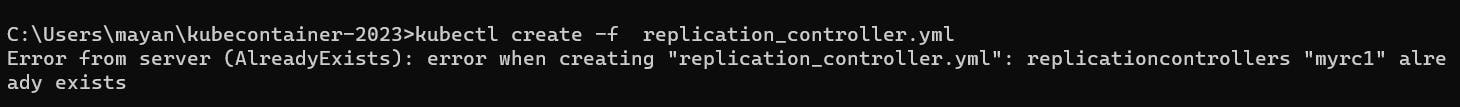

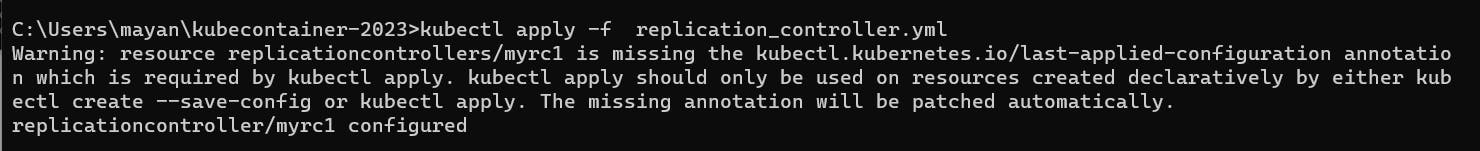

Now, if we make some change in the replication controller file and run the create command again, it shows an error (the object already exists).

So to keep the change, we have to apply it. The command is:

kubectl apply -f replication_controller.yml

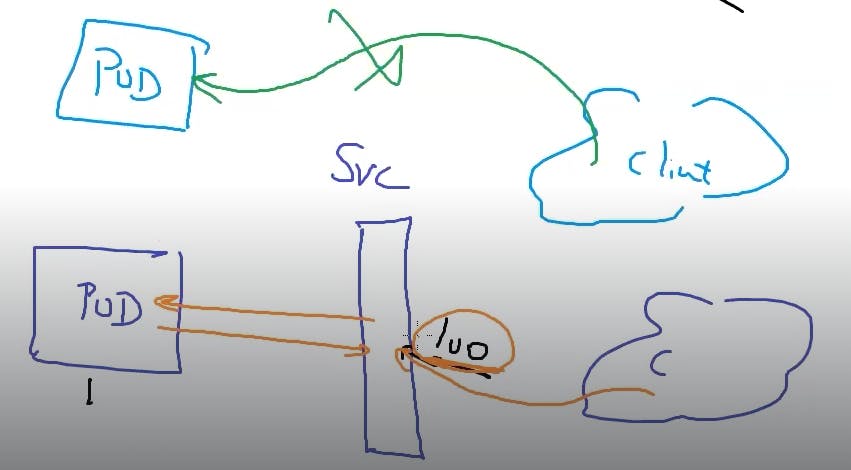

Load balancer: let's say my company runs an app in a particular pod with container c1, clients send requests to it, and suddenly the traffic to this pod increases. The pod's container (like an OS) has limited RAM and CPU; say it can handle 100 requests. If more requests arrive, we have to go to Kubernetes and say: you are the one who launched the container, and I don't want to lose my clients, because that hurts my reputation. One option is to add more hardware resources so the server can handle more requests, and when usage decreases, the extra resources are removed again by Kubernetes (as needed). This approach is called scaling.

Kubernetes code (manifests) is written in YAML, which follows a declarative approach: we describe the desired state, and Kubernetes works to maintain it.

Scaling of pods -->

Vertical scaling: increasing the physical resources of a server, such as CPU, RAM, and storage, to handle traffic is called vertical scaling.

a) Increasing resources --> scale up; decreasing resources --> scale down.

b) Disadvantage: single point of failure.

We still use this approach sometimes, but much less nowadays; in today's world horizontal scaling is used far more.

Horizontal scaling: creating similar replicas of the server to handle the traffic is known as horizontal scaling.

a) Creating more replicas --> scale out

b) Removing replicas --> scale in

Through horizontal scaling we get replicas that handle the requests: some requests go to the first replica, some to the second, and so on.

The question is: who decides which replica a request goes to? The client sends its request to an intermediary program instead of the real server. For example, the client requests IP 100; that IP belongs to the intermediary program, which forwards the request to one of the real servers behind it. This intermediary program is called a proxy program.

This program handles the traffic (traffic management).

This traffic management is done by the load balancer.

For horizontal scaling, we always need a load balancer.

Load balancer == service

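\>> The load balancer can check the health of the servers.

\>> Load balancers commonly distribute requests with a round-robin algorithm. (A Kubernetes Service, via kube-proxy in its default iptables mode, actually picks a backend pod at random, so the spread is similar but not strictly in turn.)

\>> The load balancer also works as a reverse proxy between the client and the server.

\>> Scaling of a replication controller from the YAML file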

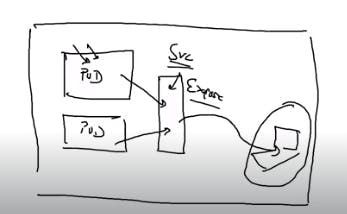

Let's create the service.

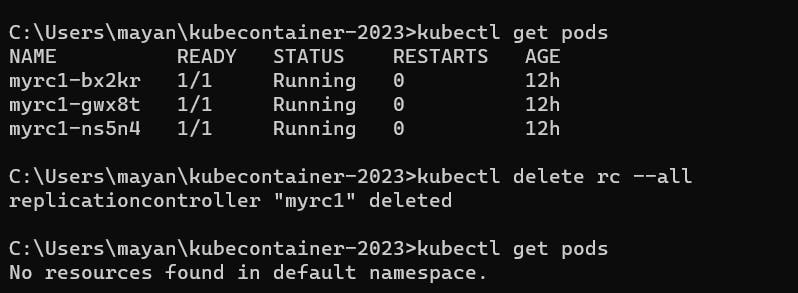

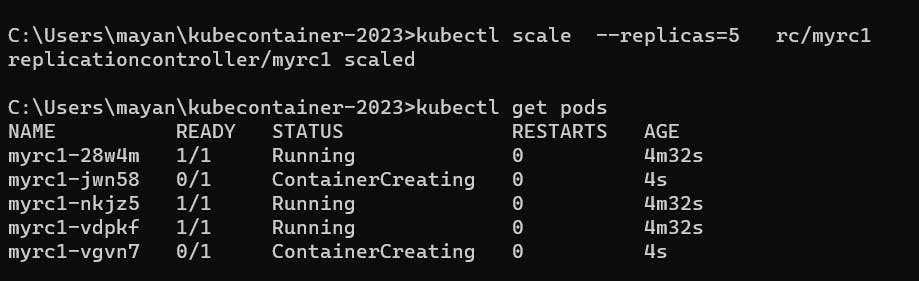

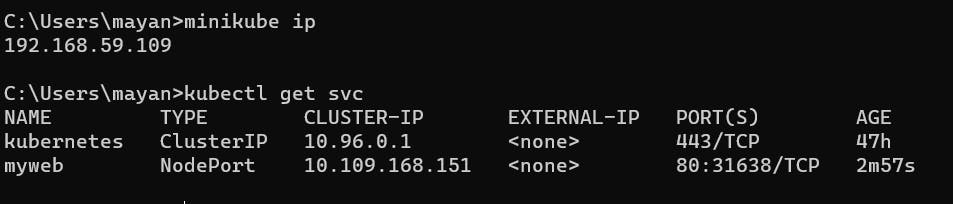

Delete the replication controller.

To delete the replication controller: kubectl delete rc --all

Now we have no pods running.

There are two things I want:

1) no single point of failure

2) load balancing

If I want to launch two, three, or any number of copies of a pod, I can't do it directly;

I do it with the help of a replication controller.

Scaling the replication controller:

kubectl scale --replicas=3 rc/myrc1

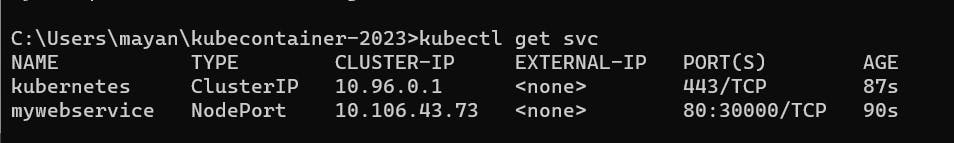

load balancing ==service (svc)

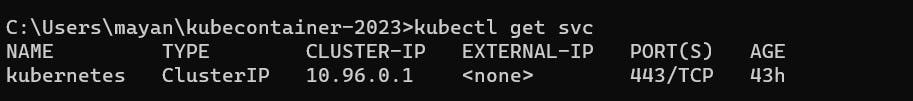

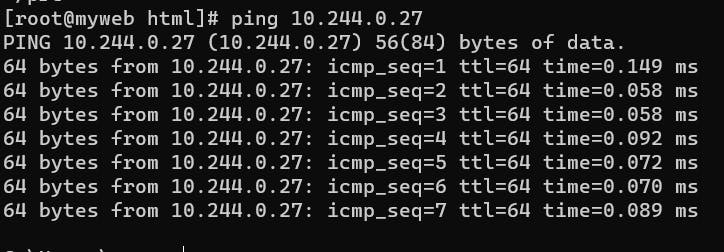

Check the services running: kubectl get svc

We see that one service is already running: the default kubernetes service, which points to the API server.

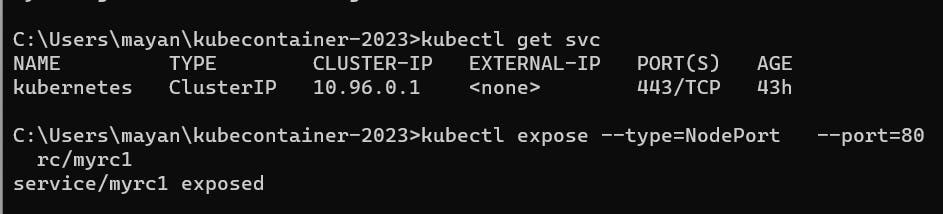

Now we create the service by exposing the replication controller:

kubectl expose --type=SERVICE_TYPE --port=80 rc/RC_NAME

For example: kubectl expose --type=NodePort --port=80 rc/myrc1

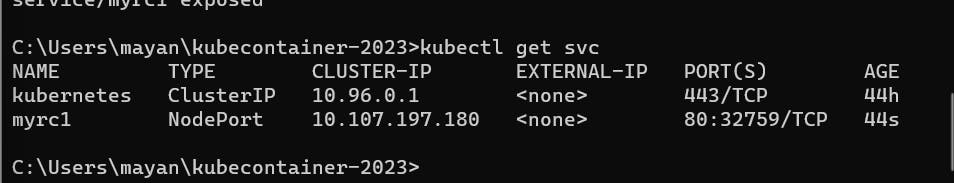

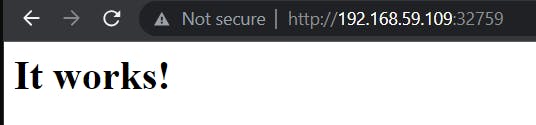

32759 is frontend port

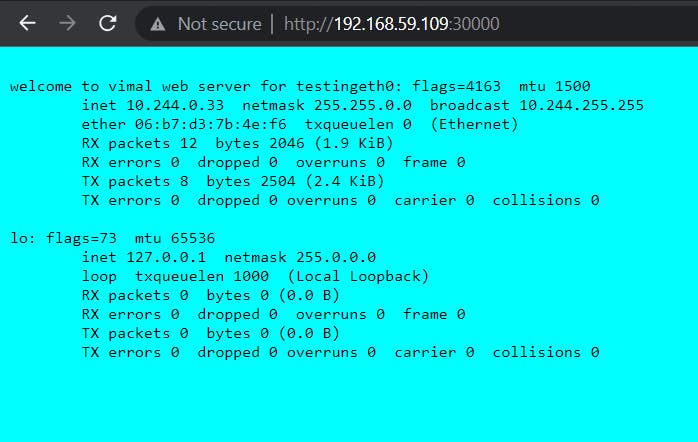

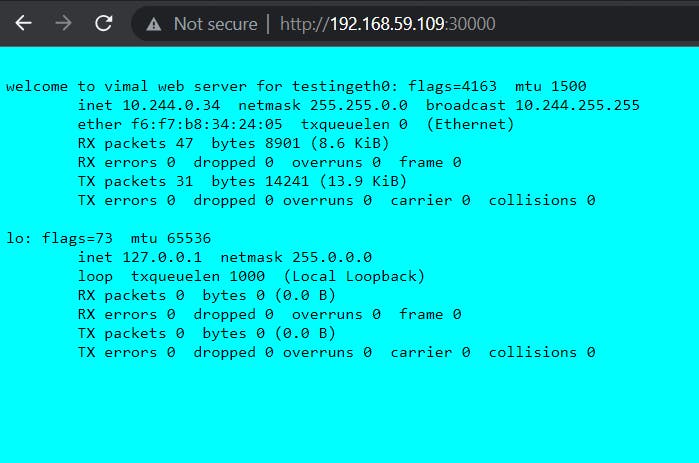

to check whether it works or not

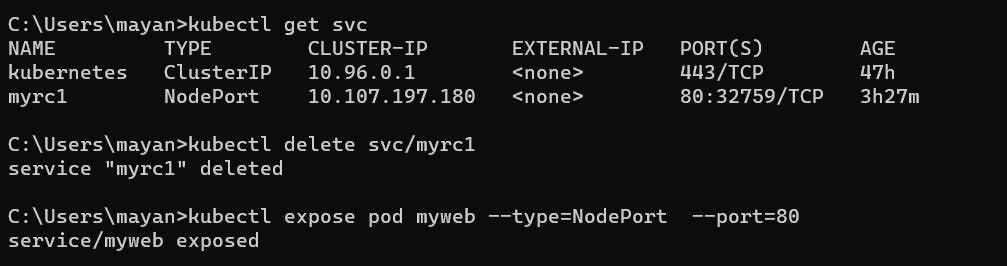

Browse it: http://MINIKUBE_IP:FRONTEND_PORT

To check the minikube IP, use the minikube ip command.

frontend port == NodePort, which here is 32759

Whenever a pod goes down, the replication controller launches another one, and the service connects to it automatically, because the new pod carries the labels that the service's selector matches.

In any situation, whether you need load balancing or not, it is good practice to use a service.

Earlier, we saw the client connect to the pod directly, which is not good practice.

Good practice is to always create an intermediary box, the service layer, through which the client connects to the pods.

\>>> One of the drawbacks of a software-defined network is that it is weaker in terms of performance than dedicated networking hardware.

\>>>Different types of services in Kubernetes

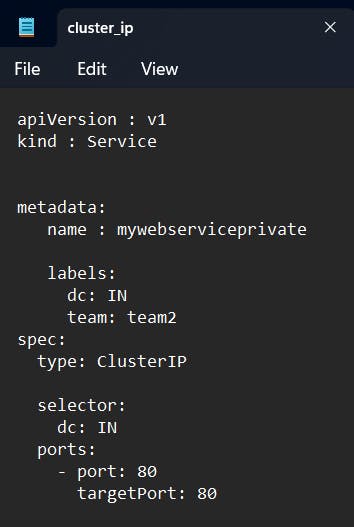

1. ClusterIP ---> used when we want the server to be accessed only by clients inside the cluster

2. NodePort ---> used when we want our server to be accessible from both internal and external clients

   ---> similar to PAT / DNAT in Docker

3. LoadBalancer (external) ---> when we have real physical load-balancing hardware and want to integrate it with Kubernetes pods, we use this external type

   ---> we can also use a load balancer from a cloud platform (e.g. Elastic Load Balancer from AWS)
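A LoadBalancer-type service is declared the same way as the other types, only with a different type field. This is a sketch assuming a hypothetical name mysvc-lb and pods labelled dc: IN. On a cloud provider such as AWS, Kubernetes asks the cloud to provision the external load balancer; on plain minikube the external IP stays pending unless you run minikube tunnel.

```yaml
# Service of type LoadBalancer in front of pods labelled dc=IN.
# Hypothetical name; needs a cloud provider (or minikube tunnel) to get an external IP.
apiVersion: v1
kind: Service
metadata:
  name: mysvc-lb
spec:
  type: LoadBalancer
  selector:
    dc: IN
  ports:
  - port: 80         # port exposed by the service
    targetPort: 80   # container port the traffic is forwarded to
```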

With the help of a svc (service), we always provide an application to the client, which is why the command is called expose: here we are exposing the application to the client.

Any system that needs a connection to the internet is given a public IP.

Any system that doesn't need a connection to the internet is given a private IP.

Which is the most secure operating system in the world?

--> The most secure system in the world is an isolated system.

So to make a system more secure, we should isolate it by giving it a private IP. We can also use firewalls, but the quickest way to secure it is a private IP.

ClusterIP == private IP

Now, if we want the system to be accessible from both the public and the private world, we need PAT / DNAT, and that is exactly what NodePort provides.

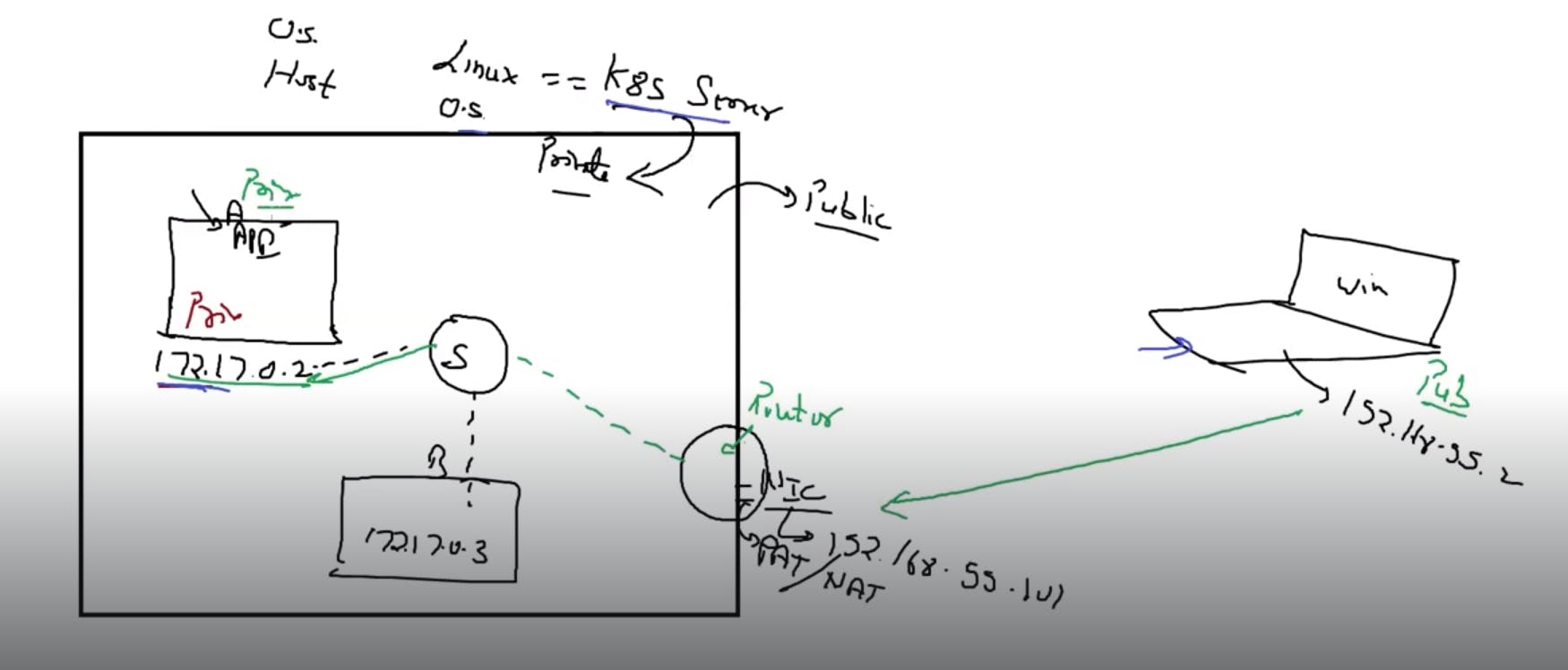

Let's say my company's server runs in the minikube VM on VirtualBox.

My Kubernetes server runs in this VM, so whatever runs inside it is private and whatever is outside is public.

The IPs given to the pods are private IPs assigned internally (by Docker in this setup), and the pods are connected internally through a switch. When a client wants to connect to the pods, it comes in through the router using PAT / DNAT.

The port number we gave, port=80 (HTTP), is the port the service listens on, and since we did not pass --target-port, it is also the container port the traffic is forwarded to. The port used for PAT on the node, through which the outside world reaches our HTTP service, is the frontend port; this frontend port == NodePort.

That is why, when we expose it, we have to tell Kubernetes the type of load balancer (service type). Here I want the service to be accessible from the outside world, so I use NodePort.

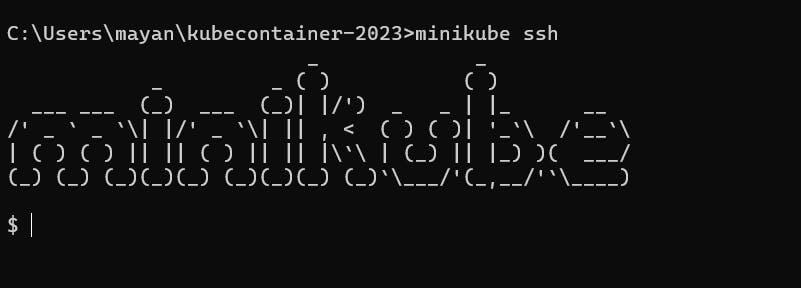

To log in to the Kubernetes cluster's server, log in to the minikube VM in Oracle VirtualBox:

username: docker, password: tcuser

or, from the command prompt: minikube ssh

When the Kubernetes server (the minikube VM) needs to reach the internet, it uses its eth0 network interface.

When I run kubectl get pods from any OS, I am acting as a user (client) of the cluster.

But if you want to maintain the Kubernetes server, you have to go inside the server.

There are two ways: manually (the VirtualBox console) or from the command prompt.

From the command prompt, use minikube ssh.

Then do whatever you need, such as installing or setting things up; all of this is done from inside the cluster.

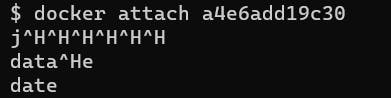

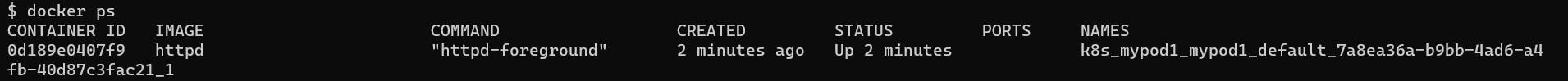

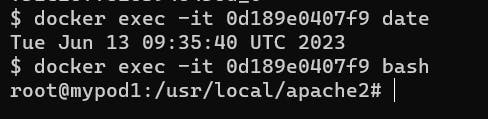

How to go inside a Docker container running in the Kubernetes cluster:

\>> log in to minikube

\>> command: docker attach (containerid/name)

Since attach only connects to the container's main process and doesn't give us an interactive shell, we use Docker's exec command instead:

docker exec -it containerid bash (for a terminal)

This particular container is running, so we go inside it.

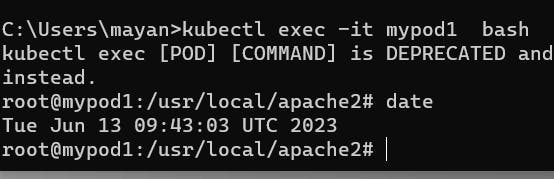

Since we do everything in Kubernetes with the kubectl command, we don't need to learn Docker for this; we can do the same thing with kubectl:

kubectl exec -it PODNAME -- COMMAND

kubectl exec -it mypod1 -- bash (the older form without --, kubectl exec -it mypod1 bash, is deprecated)

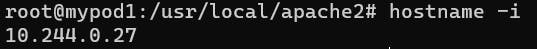

To see the IP of the container:

ifconfig, or if that doesn't work, hostname -i

We can see that both show the same IP.

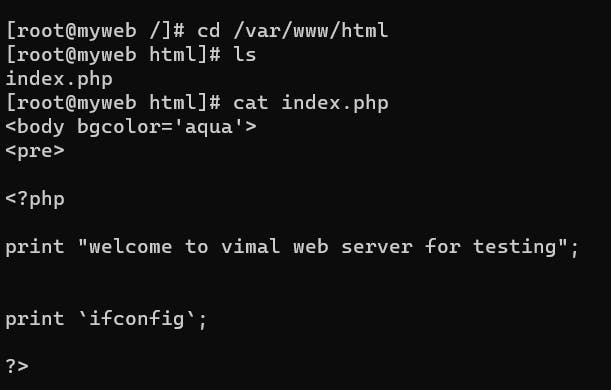

And here is the web page served by this image.

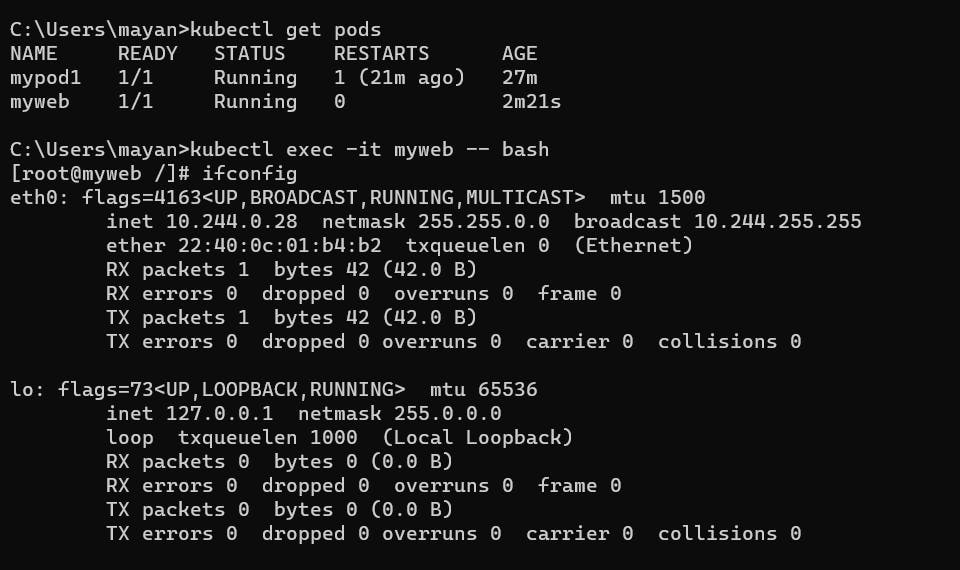

By default, every pod can connect to every other pod in the cluster.

Now, how do we expose the pod to clients outside the cluster?

Anybody in the outside world who connects on port 31638 (see above) is forwarded by Kubernetes to the service, which sends the traffic on to port 80.

Right now, the running pod is not managed by anyone, so if it dies, nobody starts it again; it is better to do this with the help of a replication controller.

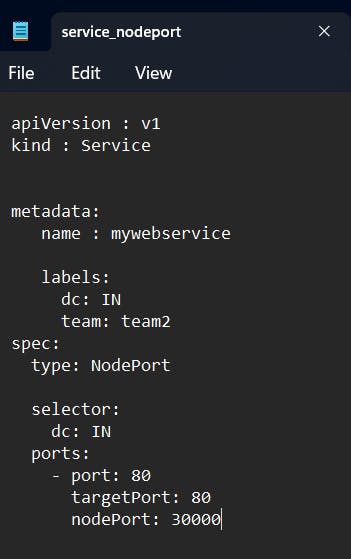

YAML file for the load balancer (service):

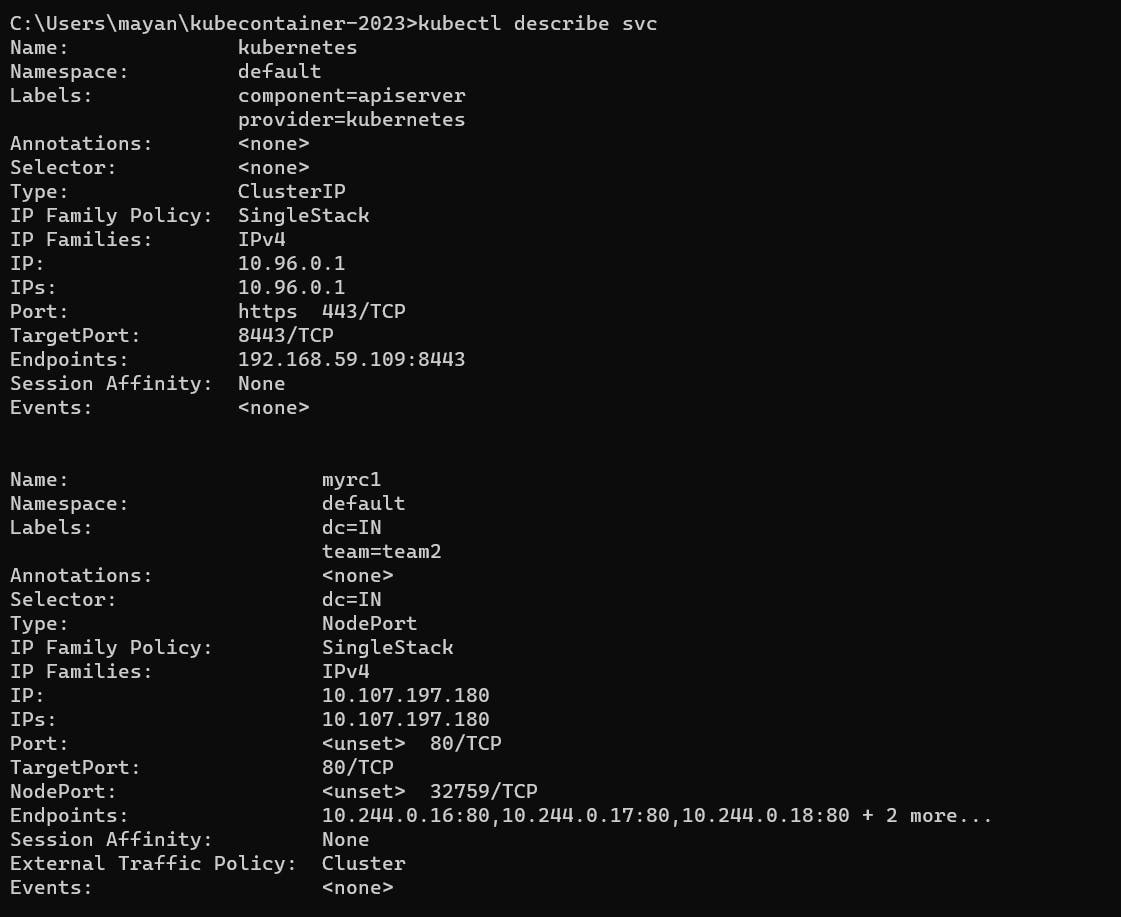

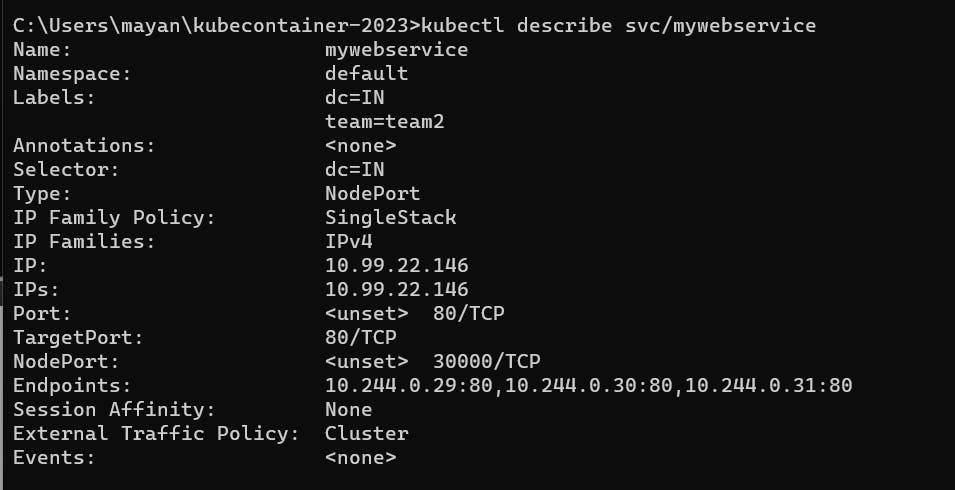
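Declaratively, a NodePort service like the one created with kubectl expose could be written roughly as below. This is a sketch: the name mysvc1 is hypothetical, the selector assumes the pods carry dc: IN, and nodePort: 31638 is pinned only to match the port seen earlier (if you leave it out, Kubernetes picks one from its NodePort range, 30000-32767 by default).

```yaml
# NodePort service: outside clients hit NODE_IP:31638, traffic goes to port 80 in the pods.
apiVersion: v1
kind: Service
metadata:
  name: mysvc1
spec:
  type: NodePort
  selector:
    dc: IN            # pods with this label become the endpoints
  ports:
  - port: 80          # service (cluster-internal) port
    targetPort: 80    # container port
    nodePort: 31638   # frontend port on the node (optional)
```

Create it with kubectl apply -f followed by your file name; kubectl get endpoints mysvc1 then lists the pod IPs behind the service.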

See the endpoints: they show that the service is connected to three pods.

When I hit the port multiple times in the browser (you can also do it with curl):

one time the IP is 10.244.0.33

another time the IP is 10.244.0.34

This means the service automatically spreads the requests across pods when the client hits it many times.

\>> The client is connected to different pods with different IPs.

How to launch a ClusterIP load balancer (service) with a YAML file:
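A minimal ClusterIP sketch follows; type: ClusterIP is also the default, so that line could be omitted. The name mysvc-internal and the dc: IN selector are assumptions. Such a service gets only a cluster-internal (private) IP, so it is reachable from other pods, not from outside the cluster.

```yaml
# ClusterIP service: private, cluster-internal virtual IP in front of pods labelled dc=IN.
apiVersion: v1
kind: Service
metadata:
  name: mysvc-internal
spec:
  type: ClusterIP     # default type; reachable only from inside the cluster
  selector:
    dc: IN
  ports:
  - port: 80
    targetPort: 80
```

From inside another pod in the same namespace (for example via kubectl exec), the service should be reachable by name, e.g. http://mysvc-internal, through cluster DNS.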

I hope you enjoyed the blog; do share and like it!

Want to connect with me? 👇👇👇

My Contact Info:

📩Email:- mayank07082001@gmail.com

LinkedIn:- linkedin.com/in/mayank-sharma-devops